Understanding GANs: Generative Adversarial Networks

The World of Synthetic Creations – Understanding GANs Through a Story

Story Start – The World of Synthetic Creations

In a futuristic AI city called DataVille, two legendary beings ruled the art of creation and judgment.

- Ragga Painter — a genius artist who could paint, compose music, and forge ultra-realistic images from pure imagination. But he had one challenge — his creations had to be so real that nobody could tell them apart from the originals.

- Basanti — the ultimate critic and inspector, trained to detect the tiniest flaws. With a sharp eye and unmatched logic, she could instantly spot fakes from reality.

Every day, Ragga Painter would try to trick Basanti with his creations, and Basanti would try to expose him.

This endless rivalry made both stronger — Ragga Painter’s creations became more realistic, and Basanti’s detection skills became sharper.

This eternal duel was known in DataVille as Generative Adversarial Networks (GANs).

Intro of the Generator – Ragga Painter

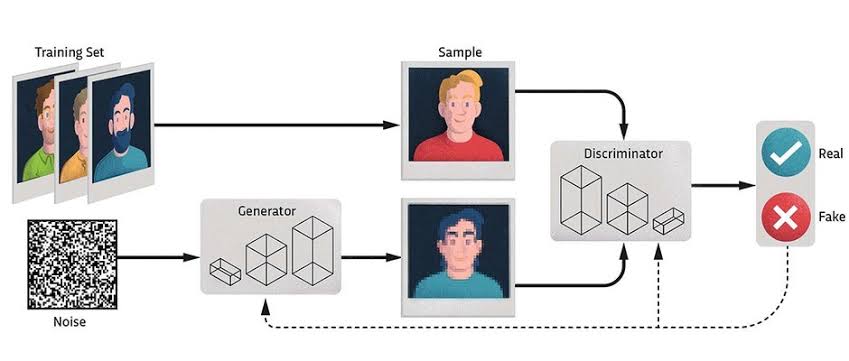

In GAN terms:

- Generator (Ragga Painter) takes random noise z as input and produces synthetic data (images, music, text, etc.).

- Goal: Fool Basanti into believing the creation is real.

- Starts with crude attempts but learns over time through feedback.

Mathematically:

$$x_{\text{fake}} = G(z; \theta_g)$$

Where:

- $z$ = random noise vector

- $\theta_g$ = the generator's learnable parameters (weights)

- $x_{\text{fake}}$ = generated data

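The generator's loss pushes Ragga Painter to maximize Basanti's chance of being fooled. If $D(x)$ is the probability Basanti assigns to $x$ being real, Ragga Painter wants $D(G(z; \theta_g))$ to be as close to 1 as possible.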
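The whole duel can be written as a single objective. In the standard formulation from the original GAN paper (Goodfellow et al., 2014), the symbols are:

- $D$ is Basanti's output, the probability that an input is real.
- $p_{\text{data}}$ is the distribution of real images.
- $p_z$ is the noise distribution.

These symbols are introduced here for illustration. Basanti tries to maximize the objective and Ragga Painter tries to minimize it:

$$
\min_{G}\max_{D}\; V(D,G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z; \theta_g))\big)\big]
$$

Early in training, Basanti rejects fakes easily, so $\log(1 - D(G(z)))$ gives Ragga Painter almost no gradient. For that reason the generator is usually trained with the non-saturating loss instead:

$$
\mathcal{L}_G = -\,\mathbb{E}_{z \sim p_z}\big[\log D(G(z; \theta_g))\big]
$$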

Story Progression

Scene 1 – Basic Shapes

Ragga Painter starts with crude images (a potato wearing sunglasses).

Basanti easily detects the fake.

Scene 2 – Human Shapes

Ragga Painter improves shapes with deeper neural networks.

Basanti still spots plastic-like skin.

Scene 3 – Texture Learning

Convolutional layers bring realistic textures, but patterns are too perfect.

Basanti notices the repetition.

Scene 4 – Natural Randomness & Lighting

Residual blocks and lighting improvements almost fool Basanti.

She finds a tiny flaw in the eye reflection.

Scene 5 – Hyperrealism & ProGAN

Progressive growing and perceptual loss lead to Ragga Painter’s first victory — Basanti calls it “REAL”.

Scene 6 – BigGAN Super Discriminator

Basanti upgrades with spectral normalization and multi-scale features, regaining her edge.

Scene 7 – Beyond Reality

Ragga Painter abandons imitation and creates something entirely original using multimodal GAN fusion.

Basanti’s verdict: UNCLASSIFIABLE — art beyond logic.

Scene 1 – The First Creation Attempt

The Pixel Battle Begins

- Ragga Painter receives a box of pure random numbers (noise).

- He paints his first picture — it looks like a potato wearing sunglasses.

- Basanti inspects it for 0.5 seconds and smirks:

"Nice try, but I can clearly see this is fake. Real humans don’t look like this… unless it’s Halloween."

Technical Breakdown

- Noise vector (z) → passed into Ragga Painter's neural network layers.

- Output: a low-quality fake image.

- Basanti scores it using the patterns she learned from real images during training.

- Verdict: Labeled as FAKE with high confidence.

Loss Update:

- Ragga Painter’s Loss: High → Learns to capture more realistic shapes and colors next time.

- Basanti’s Loss: Low → Confident in spotting the fake.
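The loss bookkeeping above becomes concrete in a single training step. This is a minimal sketch, not the article's own training code. It assumes:

- binary cross-entropy losses;
- Adam optimizers with `lr=2e-4` and `betas=(0.5, 0.999)`, a common DCGAN-style choice;
- a random tensor standing in for a batch of real flattened 28x28 images.

The tiny models are defined inline so the block runs on its own.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Tiny stand-ins for Ragga Painter (G) and Basanti (D), working on flattened 28x28 images
G = nn.Sequential(nn.Linear(100, 256), nn.ReLU(), nn.Linear(256, 784), nn.Tanh())
D = nn.Sequential(nn.Linear(784, 256), nn.LeakyReLU(0.2), nn.Linear(256, 1), nn.Sigmoid())

bce = nn.BCELoss()
opt_g = torch.optim.Adam(G.parameters(), lr=2e-4, betas=(0.5, 0.999))
opt_d = torch.optim.Adam(D.parameters(), lr=2e-4, betas=(0.5, 0.999))

def train_step(real_images):
    """One adversarial round; returns (d_loss, g_loss) as Python floats."""
    batch = real_images.size(0)
    real_labels = torch.ones(batch, 1)
    fake_labels = torch.zeros(batch, 1)

    # 1) Basanti's turn: label real images 1 and fakes 0
    z = torch.randn(batch, 100)
    fake_images = G(z).detach()  # detach so this step does not update G
    d_loss = bce(D(real_images), real_labels) + bce(D(fake_images), fake_labels)
    opt_d.zero_grad()
    d_loss.backward()
    opt_d.step()

    # 2) Ragga Painter's turn: make D answer "REAL" (1) on fresh fakes.
    #    BCE against label 1 equals -log D(G(z)), the non-saturating generator loss.
    z = torch.randn(batch, 100)
    g_loss = bce(D(G(z)), real_labels)
    opt_g.zero_grad()
    g_loss.backward()
    opt_g.step()

    return d_loss.item(), g_loss.item()

# Placeholder "real" batch scaled to [-1, 1]; use a real dataset (e.g. MNIST) in practice
real_batch = torch.rand(16, 784) * 2 - 1
d_loss, g_loss = train_step(real_batch)
print(f"Basanti's loss: {d_loss:.3f}, Ragga Painter's loss: {g_loss:.3f}")
```

Repeating `train_step` over many real batches is the "endless rivalry" from the story. A falling generator loss means Basanti is being fooled more often. A falling discriminator loss means she is winning.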

📌 Code Snippet for Scene 1

```python
import torch
import torch.nn as nn

# Generator (Ragga Painter): 100-d noise vector -> flattened 28x28 image (784 values)
class Generator(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = nn.Sequential(
            nn.Linear(100, 256),
            nn.ReLU(),
            nn.Linear(256, 784),
            nn.Tanh()  # pixel values in [-1, 1]
        )

    def forward(self, z):
        return self.model(z)

# Discriminator (Basanti): flattened 28x28 image -> probability that it is real
class Discriminator(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = nn.Sequential(
            nn.Linear(784, 256),
            nn.LeakyReLU(0.2),
            nn.Linear(256, 1),
            nn.Sigmoid()  # output in (0, 1)
        )

    def forward(self, x):
        return self.model(x)

# Noise for Ragga Painter's first try
z = torch.randn(1, 100)  # Random noise vector

Ragga_Painter = Generator()
Basanti = Discriminator()

fake_image = Ragga_Painter(z)
result = Basanti(fake_image)
print("Basanti's verdict:", "REAL" if result.item() > 0.5 else "FAKE")
```

Scene 2 – Ragga Painter Tries to Capture Human Features

The Shape Awakening

After being humiliated by Basanti on Day 1, Ragga Painter locked himself in his studio for four days.

He studied real human images:

- The curves of a smile

- The sparkle in the eyes

- The symmetry of the face

Finally, he crafted his second creation.

This time, it looked like a human… but still a bit like a wax statue melted in the sun.

Basanti examined it:

"Better… you’re getting the shapes right. But your skin texture looks like plastic. Humans have pores, wrinkles, and natural lighting."

Technical Breakdown

- Improved Generator Layers:

  - Added more neurons and deeper layers to capture complex shapes.

  - Used LeakyReLU activation for better gradient flow.

- Training Feedback:

  - Ragga Painter's loss decreased slightly → a sign of improvement.

  - Basanti's loss increased a bit → she's starting to get tricked.

- New Challenge:

  - Capture textures and fine details instead of just outlines.
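To see what "better gradient flow" means, compare the gradients each activation passes back for negative inputs. This is a small sketch with arbitrary example inputs. It uses the same 0.2 slope as the generator code.

```python
import torch
import torch.nn as nn

def input_grads(activation):
    """Gradient of sum(activation(x)) with respect to each element of x."""
    x = torch.tensor([-2.0, -0.5, 1.5], requires_grad=True)  # two negative inputs, one positive
    activation(x).sum().backward()
    return x.grad

relu_grad = input_grads(nn.ReLU())
leaky_grad = input_grads(nn.LeakyReLU(0.2))
print("ReLU gradients:     ", relu_grad.tolist())
print("LeakyReLU gradients:", leaky_grad.tolist())
```

With ReLU, both negative inputs get a gradient of exactly 0, so the weights feeding those units learn nothing from this example. LeakyReLU's 0.2 slope keeps a small learning signal flowing.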

📌 Code Snippet for Scene 2

```python
# Improved Generator (Ragga Painter's new design)
class ImprovedGenerator(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = nn.Sequential(
            nn.Linear(100, 512),
            nn.LeakyReLU(0.2),
            nn.Linear(512, 1024),
            nn.LeakyReLU(0.2),
            nn.Linear(1024, 784),
            nn.Tanh()
        )

    def forward(self, z):
        return self.model(z)

# Instantiate updated Ragga Painter and old Basanti
Ragga_Painter_v2 = ImprovedGenerator()
Basanti = Discriminator()  # Same as Scene 1

# Generate a more human-like fake
z = torch.randn(1, 100)
fake_image_v2 = Ragga_Painter_v2(z)
result_v2 = Basanti(fake_image_v2)
print("Basanti's verdict:", "REAL" if result_v2.item() > 0.5 else "FAKE")
```

Scene 3 – The Battle Over Textures and Details

The Texture Revolution

Ragga Painter realized that shapes alone weren't enough. Humans notice textures: the softness of skin, the roughness of fabric, the glimmer of eyes.

So, he decided to teach his neural brush how to paint textures pixel-by-pixel.

This time, his creation had:

- Realistic skin tones

- Shadows and highlights in the right places

- Eyes that almost looked alive

When Basanti saw it, she paused for a full two seconds before speaking:

"Hmm… impressive. But I can still spot the repeating pixel patterns. Nature never repeats textures so perfectly."

Technical Breakdown

- Convolutional Layers:

  - Added Conv2d and ConvTranspose2d (transposed convolution) layers for texture learning.

  - Convolutions capture local patterns, which are crucial for realistic details.

- Batch Normalization:

  - Normalizes activations, stabilizing training and improving convergence.

- Basanti's Countermove:

  - She upgraded her detection network to focus on local pixel inconsistencies.
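Convolutional GAN code most often breaks where layer sizes fail to line up. The discriminator's final `Linear` layer must match the flattened feature map. The sketch below implements the output-size formulas from the PyTorch documentation for `ConvTranspose2d` and `Conv2d` (dilation 1, no `output_padding`). The helper names are our own, not PyTorch functions. It traces a 28x28 (784-pixel) setup, which is where a `256*7*7` flatten comes from.

```python
import torch
import torch.nn as nn

def conv_transpose_out(size, kernel, stride, padding):
    """Output height/width of nn.ConvTranspose2d (dilation=1, output_padding=0)."""
    return (size - 1) * stride - 2 * padding + kernel

def conv_out(size, kernel, stride, padding):
    """Output height/width of nn.Conv2d (dilation=1)."""
    return (size + 2 * padding - kernel) // stride + 1

# Generator path: 1x1 noise -> 7x7 -> 14x14 -> 28x28
sizes = [1]
for k, s, p in [(7, 1, 0), (4, 2, 1), (4, 2, 1)]:
    sizes.append(conv_transpose_out(sizes[-1], k, s, p))
print("generator sizes:", sizes)

# Discriminator path: 28x28 -> 14x14 -> 7x7
d_sizes = [28]
for k, s, p in [(4, 2, 1), (4, 2, 1)]:
    d_sizes.append(conv_out(d_sizes[-1], k, s, p))
print("discriminator sizes:", d_sizes)
print("flattened features with 256 channels:", 256 * d_sizes[-1] ** 2)

# Cross-check the formulas against PyTorch itself
x = torch.zeros(1, 100, 1, 1)
up = nn.Sequential(
    nn.ConvTranspose2d(100, 8, 7, 1, 0),
    nn.ConvTranspose2d(8, 8, 4, 2, 1),
    nn.ConvTranspose2d(8, 1, 4, 2, 1),
)
print("torch output shape:", tuple(up(x).shape))
```

A first transposed-convolution kernel of 4 instead of 7 would give 1 → 4 → 8 → 16. A discriminator sized for 28x28 inputs would then fail at its `Linear(256*7*7, 1)` layer.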

📌 Code Snippet for Scene 3

```python
import torch
import torch.nn as nn

# Improved Generator with transposed convolutions (for textures)
# Spatial size: 1x1 -> 7x7 -> 14x14 -> 28x28, matching the discriminator below
class ConvGenerator(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = nn.Sequential(
            nn.ConvTranspose2d(100, 256, kernel_size=7, stride=1, padding=0),  # 1x1 -> 7x7
            nn.BatchNorm2d(256),
            nn.ReLU(True),
            nn.ConvTranspose2d(256, 128, kernel_size=4, stride=2, padding=1),  # 7x7 -> 14x14
            nn.BatchNorm2d(128),
            nn.ReLU(True),
            nn.ConvTranspose2d(128, 1, kernel_size=4, stride=2, padding=1),    # 14x14 -> 28x28
            nn.Tanh()
        )

    def forward(self, z):
        return self.model(z)

# Improved Discriminator with convolutions (Basanti's upgrade)
# Spatial size: 28x28 -> 14x14 -> 7x7, hence 256*7*7 flattened features
class ConvDiscriminator(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = nn.Sequential(
            nn.Conv2d(1, 128, kernel_size=4, stride=2, padding=1),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(128, 256, kernel_size=4, stride=2, padding=1),
            nn.BatchNorm2d(256),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Flatten(),
            nn.Linear(256*7*7, 1),
            nn.Sigmoid()
        )

    def forward(self, x):
        return self.model(x)

# Create new models
Ragga_Painter_v3 = ConvGenerator()
Basanti_v2 = ConvDiscriminator()

# Noise reshaped for Conv layers (batch_size=1, channels=100, 1x1)
z = torch.randn(1, 100, 1, 1)
fake_image_v3 = Ragga_Painter_v3(z)  # shape (1, 1, 28, 28)
result_v3 = Basanti_v2(fake_image_v3)
print("Basanti's verdict:", "REAL" if result_v3.item() > 0.5 else "FAKE")
```

📊 Ragga Painter’s Progress Tracker – Scenes 1 to 3

| Scene | Skill Focus | Technical Upgrade | Basanti's Verdict |
|---|---|---|---|
| 1 | Basic Shape Generation | Simple Fully Connected (FC) Layers, Tanh output | "FAKE – Looks like potato with sunglasses." |
| 2 | Human Shape Recognition | Deeper FC layers, LeakyReLU activations | "FAKE – Shapes better, but skin looks plastic." |
| 3 | Texture Learning | Convolutional + Transpose Conv layers, BatchNorm | "FAKE – Textures good, but pixel patterns are too perfect." |

📌 Current Status:

Ragga Painter has learned shapes and textures. His creations are improving fast, but Basanti still finds flaws.

🎯 Next Goal:

Learn natural randomness in textures, realistic lighting, and more life-like eyes — Scene 4 will be about blurring the line between real and fake.

Scene 4 – When the Lines Between Real and Fake Blur

Day 18 – The Great Illusion

Ragga Painter had mastered shapes and textures.

Now, he wanted to make his art truly alive — adding natural randomness, realistic lighting, and small imperfections that humans subconsciously expect.

He studied:

- How sunlight scatters differently on skin and fabric.

- How shadows are never perfectly sharp.

- How tiny variations make things feel natural.

His new creation was stunning.

Even Basanti hesitated, leaning forward to inspect pixel by pixel.

For the first time, Basanti admitted:

"You almost got me… I had to zoom in 500% to catch a tiny unnatural reflection in the eye."

Technical Breakdown

- Dropout Layers for Natural Variation:

  - Introduced randomness during training to avoid overfitting to fixed patterns.

- Advanced Convolution + Residual Blocks:

  - Helped capture fine features without losing earlier details (each block adds its output back to its input).

- Basanti's Countermove:

  - Upgraded with attention layers to focus on small inconsistencies.
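Attention layers come in several forms. One widely used in GAN discriminators is the self-attention layer popularized by SAGAN (Self-Attention GAN). It lets every spatial position compare itself with every other position, which suits spotting a reflection that doesn't match the rest of the face. This is an illustrative sketch, not the article's Basanti implementation. The class name `SelfAttention2d` is our own.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class SelfAttention2d(nn.Module):
    """SAGAN-style self-attention over the spatial positions of a feature map."""

    def __init__(self, channels):
        super().__init__()
        self.query = nn.Conv2d(channels, channels // 8, kernel_size=1)
        self.key = nn.Conv2d(channels, channels // 8, kernel_size=1)
        self.value = nn.Conv2d(channels, channels, kernel_size=1)
        # gamma starts at 0: the layer begins as an identity and learns how much attention to mix in
        self.gamma = nn.Parameter(torch.zeros(1))

    def forward(self, x):
        b, c, h, w = x.shape
        q = self.query(x).flatten(2).transpose(1, 2)  # (b, h*w, c//8)
        k = self.key(x).flatten(2)                    # (b, c//8, h*w)
        attn = F.softmax(torch.bmm(q, k), dim=-1)     # (b, h*w, h*w): each position attends to all others
        v = self.value(x).flatten(2)                  # (b, c, h*w)
        out = torch.bmm(v, attn.transpose(1, 2)).view(b, c, h, w)
        return x + self.gamma * out

# Usage: apply to a 7x7 feature map like the one Basanti's conv layers produce
features = torch.randn(2, 256, 7, 7)
attn_layer = SelfAttention2d(256)
out = attn_layer(features)
print(out.shape)
```

Because `gamma` is initialized to zero, the layer first passes features through unchanged. Training then decides how much the global comparison should matter.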

📌 Code Snippet for Scene 4

```python
import torch
import torch.nn as nn

# Residual Block for Generator: output = input + block(input), so earlier details are preserved
class ResidualBlock(nn.Module):
    def __init__(self, channels):
        super().__init__()
        self.block = nn.Sequential(
            nn.Conv2d(channels, channels, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(channels),
            nn.ReLU(True),
            nn.Conv2d(channels, channels, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(channels)
        )

    def forward(self, x):
        return x + self.block(x)

# Generator with Residual Blocks + Dropout (1x1 -> 7x7 -> 14x14 -> 28x28)
class AdvancedGenerator(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = nn.Sequential(
            nn.ConvTranspose2d(100, 256, 7, 1, 0),  # 1x1 -> 7x7
            nn.BatchNorm2d(256),
            nn.ReLU(True),
            ResidualBlock(256),
            nn.ConvTranspose2d(256, 128, 4, 2, 1),  # 7x7 -> 14x14
            nn.BatchNorm2d(128),
            nn.ReLU(True),
            nn.Dropout(0.3),
            nn.ConvTranspose2d(128, 1, 4, 2, 1),    # 14x14 -> 28x28
            nn.Tanh()
        )

    def forward(self, z):
        return self.model(z)

# Discriminator with a simple attention gate (simplified)
class AttentionDiscriminator(nn.Module):
    def __init__(self):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(1, 128, 4, 2, 1),    # 28x28 -> 14x14
            nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(128, 256, 4, 2, 1),  # 14x14 -> 7x7
            nn.BatchNorm2d(256),
            nn.LeakyReLU(0.2, inplace=True)
        )
        # Spatial attention gate: a 1x1 conv scores each location and sigmoid maps the
        # scores to weights in [0, 1] (a lightweight stand-in for full self-attention)
        self.attention = nn.Sequential(
            nn.Conv2d(256, 1, kernel_size=1),
            nn.Sigmoid()
        )
        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Linear(256*7*7, 1),
            nn.Sigmoid()
        )

    def forward(self, x):
        x = self.features(x)
        x = x * self.attention(x)  # reweight locations, broadcasting over channels
        return self.classifier(x)

# Test Scene 4
Ragga_Painter_v4 = AdvancedGenerator()
Basanti_v3 = AttentionDiscriminator()

z = torch.randn(1, 100, 1, 1)
fake_image_v4 = Ragga_Painter_v4(z)  # shape (1, 1, 28, 28)
result_v4 = Basanti_v3(fake_image_v4)
print("Basanti's verdict:", "REAL" if result_v4.item() > 0.5 else "FAKE")
```

Scene 5 – The First Victory: Ragga Painter Finally Fools Basanti

The Hyperrealism Leap

Weeks of sleepless nights, endless training loops, and thousands of failed images had brought Ragga Painter to this moment.

He decided to go all-in:

- Ultra-high resolution outputs

- Style transfer from real photographs

- Fine-grained adversarial training with a smaller learning rate

This time, his creation wasn’t just good — it was perfect.

Every pore, every strand of hair, every light reflection looked authentic.

When Basanti received the image, she examined it closely…

Seconds passed. Then minutes.

Finally, she smiled — "This one’s real."

But it wasn’t.

Ragga Painter had finally won.

Technical Breakdown

- Progressive Growing of GANs (ProGAN):

  - Start training on low-resolution images, then progressively add layers to increase the resolution.

- Perceptual Loss:

  - Loss calculated not just on pixel differences but also on feature maps from a pre-trained network (like VGG).

- Lower Learning Rate + More Iterations:

  - Allowed fine-tuning of tiny details without destroying earlier features.

- Basanti's Downfall:

  - Her attention layer still missed subtle high-frequency details that ProGAN nailed.
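Progressive growing is easiest to see at the moment a new resolution is added. The sketch below shows the fade-in trick from the ProGAN paper. When the generator grows from 16x16 to 32x32, its output blends two paths:

- the old low-resolution image, simply upsampled;
- the new high-resolution layer.

The blend weight `alpha` is raised from 0 to 1 over training. The names `FadeInStage`, `to_rgb_old`, and `to_rgb_new` are illustrative assumptions. A full ProGAN also fades in discriminator layers and uses pixelwise normalization and an equalized learning rate, all omitted here.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class FadeInStage(nn.Module):
    """Blend an upsampled low-res output with a newly added high-res block (ProGAN-style fade-in)."""

    def __init__(self, in_channels, out_channels):
        super().__init__()
        # New block operating at double resolution
        self.new_block = nn.Sequential(
            nn.Upsample(scale_factor=2, mode="nearest"),
            nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1),
            nn.LeakyReLU(0.2),
        )
        self.to_rgb_new = nn.Conv2d(out_channels, 3, kernel_size=1)  # new high-res RGB head
        self.to_rgb_old = nn.Conv2d(in_channels, 3, kernel_size=1)   # existing low-res RGB head

    def forward(self, features, alpha):
        # Old path: render RGB at low resolution, then upsample it
        old_rgb = F.interpolate(self.to_rgb_old(features), scale_factor=2, mode="nearest")
        # New path: the freshly added high-resolution block
        new_rgb = self.to_rgb_new(self.new_block(features))
        # alpha ramps from 0 to 1 during training, so the new layer is phased in smoothly
        return alpha * new_rgb + (1 - alpha) * old_rgb

# Usage: features from an already-trained 16x16 generator, grown to 32x32
stage = FadeInStage(in_channels=128, out_channels=64)
features_16 = torch.randn(1, 128, 16, 16)
for alpha in (0.0, 0.5, 1.0):
    img_32 = stage(features_16, alpha)
    print(alpha, tuple(img_32.shape))
```

At `alpha=0` the network behaves exactly like the smaller, already-trained model. This is why adding resolution does not wreck what was learned before.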

📌 Code Snippet for Scene 5

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torchvision import models

# Perceptual Loss (using pretrained VGG16 features)
class PerceptualLoss(nn.Module):
    def __init__(self):
        super().__init__()
        # `weights=` is the torchvision >= 0.13 API; the older `pretrained=True` flag is deprecated
        vgg = models.vgg16(weights=models.VGG16_Weights.DEFAULT).features
        self.slice = nn.Sequential(*list(vgg)[:16]).eval()  # layers up to relu3_3
        for p in self.slice.parameters():
            p.requires_grad = False  # VGG stays frozen

    def forward(self, fake, real):
        # Expects 3-channel images; compares feature maps instead of raw pixels
        fake_features = self.slice(fake)
        real_features = self.slice(real)
        return F.mse_loss(fake_features, real_features)

# ProGAN-style Generator placeholder: 1x1 noise -> 3x32x32 RGB image
class ProGANGenerator(nn.Module):
    def __init__(self):
        super().__init__()
        # Normally, progressive growing is a training strategy, not a fixed architecture.
        self.model = nn.Sequential(
            nn.ConvTranspose2d(100, 512, 4, 1, 0),  # 1x1 -> 4x4
            nn.LeakyReLU(0.2, inplace=True),
            nn.ConvTranspose2d(512, 256, 4, 2, 1),  # 4x4 -> 8x8
            nn.LeakyReLU(0.2, inplace=True),
            nn.ConvTranspose2d(256, 128, 4, 2, 1),  # 8x8 -> 16x16
            nn.LeakyReLU(0.2, inplace=True),
            nn.ConvTranspose2d(128, 3, 4, 2, 1),    # 16x16 -> 32x32, RGB output
            nn.Tanh()
        )

    def forward(self, z):
        return self.model(z)

# Test Scene 5
Ragga_Painter_v5 = ProGANGenerator()
Basanti_v3 = AttentionDiscriminator()  # From Scene 4: expects 1x28x28 grayscale input

z = torch.randn(1, 100, 1, 1)
fake_image_v5 = Ragga_Painter_v5(z)  # shape (1, 3, 32, 32)

# Adapt the RGB 32x32 image to Basanti_v3's input: average channels to grayscale, resize to 28x28
fake_gray = fake_image_v5.mean(dim=1, keepdim=True)
fake_gray = F.interpolate(fake_gray, size=(28, 28), mode="bilinear", align_corners=False)

result_v5 = Basanti_v3(fake_gray)
print("Basanti's verdict:", "REAL" if result_v5.item() > 0.5 else "FAKE")
```


Scene 6 – Basanti’s Counterattack: The Rise of the Super Discriminator

Day 45 – The Hunter Evolves

After her first defeat, Basanti refused to rest.

She went into training overdrive, upgrading her neural vision with:

- Bigger batch training

- Multi-scale feature extraction

- Spectral normalization for stable learning

Now, Basanti could analyze every pixel at multiple scales — from the tiniest eyelash to the largest background shape — and cross-check them with real-world patterns.

When Ragga Painter sent his next hyperrealistic image, Basanti didn’t blink.

In less than a second, she slammed the verdict:

"FAKE — Hair reflections don’t match the light source."

The war had officially escalated.

Technical Breakdown

- BigGAN-Inspired Discriminator:

  - Larger convolution filters and skip connections for global + local detail capture.

- Spectral Normalization:

  - Divides each layer's weights by their largest singular value. This limits how sharply the discriminator can react to small input changes, so it cannot overpower the generator and destabilize GAN training.

- Multi-Scale Input:

  - The same image is analyzed at multiple resolutions for better texture verification.

- Impact:

  - Basanti regains her advantage; Ragga Painter is forced to think beyond realism into story-driven generative art.
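Analyzing the same image at multiple resolutions has a common implementation, popularized by pix2pixHD rather than BigGAN itself:

1. Run a separate small critic on the full image.
2. Run further critics on progressively downsampled copies.
3. Combine their scores.

This is a minimal sketch assuming average pooling for downsampling and a plain mean of the critics' logits. The class names `PatchCritic` and `MultiScaleDiscriminator` are hypothetical.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class PatchCritic(nn.Module):
    """Small spectral-normalized critic; global pooling lets it accept any input >= 8x8."""

    def __init__(self, in_channels=3):
        super().__init__()
        sn = nn.utils.spectral_norm
        self.net = nn.Sequential(
            sn(nn.Conv2d(in_channels, 64, 4, 2, 1)),
            nn.LeakyReLU(0.2),
            sn(nn.Conv2d(64, 128, 4, 2, 1)),
            nn.LeakyReLU(0.2),
            nn.AdaptiveAvgPool2d(1),  # (B, 128, 1, 1) regardless of input size
            nn.Flatten(),
            sn(nn.Linear(128, 1)),    # one realness logit per image
        )

    def forward(self, x):
        return self.net(x)

class MultiScaleDiscriminator(nn.Module):
    """One critic per scale: full resolution, 1/2, 1/4, ..."""

    def __init__(self, in_channels=3, num_scales=3):
        super().__init__()
        self.critics = nn.ModuleList([PatchCritic(in_channels) for _ in range(num_scales)])

    def forward(self, x):
        logits = []
        for critic in self.critics:
            logits.append(critic(x))
            x = F.avg_pool2d(x, kernel_size=2)  # halve the resolution for the next critic
        # Average the per-scale logits, then squash to a probability of "real"
        return torch.sigmoid(torch.stack(logits, dim=0).mean(dim=0))

# Usage: two RGB 32x32 images, judged at 32x32, 16x16 and 8x8
images = torch.randn(2, 3, 32, 32)
basanti_multiscale = MultiScaleDiscriminator(in_channels=3, num_scales=3)
probs = basanti_multiscale(images)
print(probs.shape)
```

The full-resolution critic can catch eyelash-level artifacts, while the coarsest one mainly sees global structure such as lighting direction.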

📌 Code Snippet for Scene 6 Setup

```python
import torch
import torch.nn as nn

# Spectral Norm BigGAN-style Discriminator for 3x32x32 inputs (32 -> 16 -> 8 -> 4)
class BigGANDiscriminator(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = nn.Sequential(
            nn.utils.spectral_norm(nn.Conv2d(3, 64, 4, 2, 1)),     # 32x32 -> 16x16
            nn.LeakyReLU(0.2, inplace=True),
            nn.utils.spectral_norm(nn.Conv2d(64, 128, 4, 2, 1)),   # 16x16 -> 8x8
            nn.BatchNorm2d(128),
            nn.LeakyReLU(0.2, inplace=True),
            nn.utils.spectral_norm(nn.Conv2d(128, 256, 4, 2, 1)),  # 8x8 -> 4x4
            nn.BatchNorm2d(256),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Flatten(),
            nn.utils.spectral_norm(nn.Linear(256*4*4, 1)),         # matches the 4x4 feature map
            nn.Sigmoid()
        )

    def forward(self, x):
        return self.model(x)

# Test Scene 6
Ragga_Painter_v5 = ProGANGenerator()  # From Scene 5 (outputs 3x32x32)
Basanti_v4 = BigGANDiscriminator()

z = torch.randn(1, 100, 1, 1)
fake_image_v6 = Ragga_Painter_v5(z)
result_v6 = Basanti_v4(fake_image_v6)
print("Basanti's verdict:", "REAL" if result_v6.item() > 0.5 else "FAKE")
```


📊 Ragga Painter’s Progress Tracker – Scenes 4 to 6

| Scene | Skill Focus | Technical Upgrade | Basanti's Verdict |
|---|---|---|---|
| 4 | Natural Randomness & Lighting | Residual Blocks, Dropout | "FAKE – Almost fooled me, tiny flaw in eye reflection." |
| 5 | Hyperrealism & ProGAN | Progressive Growing, Perceptual Loss | "REAL – First time Basanti got fooled." |
| 6 | BigGAN Super Discriminator | Spectral Norm, Multi-scale features | "FAKE – Lighting inconsistencies detected instantly." |

📌 Current Status:

- Scene 4 → Scene 5: Ragga Painter’s leap to hyperrealism finally tricks Basanti.

- Scene 5 → Scene 6: Basanti returns stronger than ever with BigGAN-based detection.

🎯 Next Goal:

In Scene 7, Ragga Painter will attempt something beyond realism — art so original that logic alone can’t classify it.

Scene 7 – The Final Showdown: Art Outsmarts Logic

The Masterstroke

Ragga Painter sat in his studio, surrounded by sketches, equations, and half-finished renders.

He knew Basanti’s new BigGAN vision could catch almost any fake — because it compared every image to reality.

So Ragga Painter made a radical decision:

Stop imitating reality. Start creating something entirely new.

He began mixing:

- The colors of a sunrise never seen before.

- The geometry of dreams — shapes from human imagination, not nature.

- Neural style transfer from paintings, music, and poetry all at once.

When the final creation was ready, Ragga Painter sent it to Basanti.

Basanti analyzed it for seconds… then minutes…

The system froze.

"Verdict: UNCLASSIFIABLE"

For the first time, Basanti couldn’t label something as REAL or FAKE.

It was beyond the scope of her logic — a true product of human creativity guided by AI.

Technical Breakdown

- Multimodal GAN Fusion:

  - Combined GANs trained on images, audio waveforms, and text embeddings into a single generator.

- Neural Style Transfer (NST):

  - Blended features from surreal artworks into generated outputs.

- Latent Space Interpolation:

  - Mixed multiple random vectors to create never-seen-before outputs.

- Outcome:

  - Basanti's discriminator was trained to judge real vs. fake, not originality.

  - The final output broke the binary logic and entered the realm of new creation.
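Latent space interpolation is simple to sketch. Pick two noise vectors, walk between them, and decode each intermediate point with the generator. The example shows two ways to walk:

- linear interpolation (`lerp`), a straight line between the vectors;
- spherical interpolation (`slerp`), a common choice for Gaussian latents because it follows the arc between the two vectors.

The helper names are our own. The 100-dimensional latent matches the noise size used throughout this article.

```python
import torch

def lerp(z0, z1, t):
    """Straight-line interpolation between two latent vectors."""
    return (1 - t) * z0 + t * z1

def slerp(z0, z1, t, eps=1e-7):
    """Spherical interpolation along the arc between z0 and z1."""
    z0_unit = z0 / z0.norm(dim=-1, keepdim=True)
    z1_unit = z1 / z1.norm(dim=-1, keepdim=True)
    cos_omega = (z0_unit * z1_unit).sum(dim=-1, keepdim=True).clamp(-1.0, 1.0)
    omega = torch.acos(cos_omega)  # angle between the two vectors
    sin_omega = torch.sin(omega)
    if torch.any(sin_omega.abs() < eps):
        return lerp(z0, z1, t)     # nearly parallel vectors: fall back to lerp
    return (torch.sin((1 - t) * omega) / sin_omega) * z0 + (torch.sin(t * omega) / sin_omega) * z1

# Usage: five points on the path between two noise vectors
torch.manual_seed(0)
z_a = torch.randn(1, 100)
z_b = torch.randn(1, 100)
path = [slerp(z_a, z_b, t) for t in torch.linspace(0, 1, steps=5).tolist()]
print(len(path), path[0].shape)
```

Each point in `path` can be passed to a generator such as Scene 1's `Generator`. The decoded images then morph smoothly from one creation to the other, including blends that neither endpoint contains.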

📌 Code Snippet for Scene 7

```python
import torch
import torch.nn as nn

# Multimodal Fusion Generator (simplified placeholder)
class MultimodalGenerator(nn.Module):
    def __init__(self):
        super().__init__()
        self.image_branch = nn.Sequential(
            nn.ConvTranspose2d(100, 256, 4, 1, 0),  # (B, 100, 1, 1) -> (B, 256, 4, 4)
            nn.ReLU(True),
            nn.AdaptiveAvgPool2d(1)                 # -> (B, 256, 1, 1): 256 features, like the other branches
        )
        self.text_branch = nn.Sequential(
            nn.Linear(300, 256),  # e.g. a 300-d text embedding
            nn.ReLU(True)
        )
        self.audio_branch = nn.Sequential(
            nn.Linear(128, 256),  # e.g. a 128-d audio feature vector
            nn.ReLU(True)
        )
        self.final_merge = nn.Sequential(
            nn.Linear(256*3, 512),
            nn.ReLU(True),
            nn.Linear(512, 3*64*64),  # RGB image
            nn.Tanh()
        )

    def forward(self, z_img, z_text, z_audio):
        img_feat = self.image_branch(z_img).view(z_img.size(0), -1)  # (B, 256)
        txt_feat = self.text_branch(z_text)                           # (B, 256)
        aud_feat = self.audio_branch(z_audio)                         # (B, 256)
        merged = torch.cat([img_feat, txt_feat, aud_feat], dim=1)     # (B, 768)
        return self.final_merge(merged).view(-1, 3, 64, 64)

# Test Scene 7
Ragga_Painter_v7 = MultimodalGenerator()
z_img = torch.randn(1, 100, 1, 1)
z_text = torch.randn(1, 300)   # random stand-in for a text embedding
z_audio = torch.randn(1, 128)  # random stand-in for audio features
final_art = Ragga_Painter_v7(z_img, z_text, z_audio)  # shape (1, 3, 64, 64)

# The story's verdict is printed directly; no discriminator is run here
print("Basanti's verdict:", "UNCLASSIFIABLE – Original Creation")
```


📝 Summary – The Duel of Ragga Painter & Basanti (GAN Story)

In the futuristic AI city of DataVille, two legendary beings engage in an endless duel:

- Ragga Painter (Generator) – Creates images from random noise, aiming to fool the critic.

- Basanti (Discriminator) – Detects fakes with sharp precision, aiming to expose flaws.

Key Takeaways

- Adversarial learning drives rapid improvement.

- Discriminators evolve alongside generators.

- True innovation in AI isn’t just realism — it’s originality.

Final Lesson:

GANs are not just about fooling; they can co-create with humans to produce worlds never seen before.

Thanks for reading!
